Source: Getty

Click image to enlarge

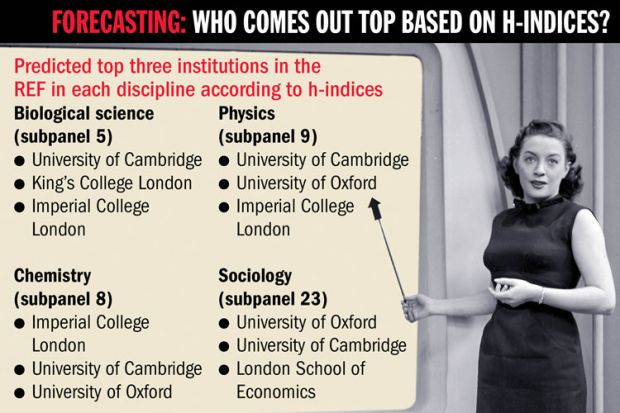

A team of researchers is hoping that its predictions of the results of the research excellence framework in four disciplines, based on the submitting departments’ “h-index”, will help to resolve whether the next REF should rely more heavily on metrics.

Broadly, the h-index measures the number of a department’s citations versus the number of academic papers it has produced. A department that has published 50 papers that have been cited 50 times or more has an h-index of 50. The index is sometimes preferred to average citation counts because it supposedly captures both productivity and quality.

Dorothy Bishop, professor of developmental neuropsychology at the University of Oxford, claimed in her blog last year that a ranking of psychology departments based on their h-indices over the assessment period of the 2008 research assessment exercise “predicted the RAE results remarkably well”.

This month, a paper was posted on the arXiv preprint server that reports similar correlations for another four units of assessment from 2008: chemistry, biology, sociology and physics. The paper, “Predicting results of the research excellence framework using departmental h-index”, claims that the correlation is particularly strong in chemistry and biology. It also uses calculations of departments’ h-indices over the REF assessment period to produce predictions for the 2014 REF, the actual results of which will be published on 18 December. One of the paper’s authors, Ralph Kenna, reader in mathematical physics at Coventry University, said that as the results are not yet known, the predictions could be considered to be unbiased.

Some subpanels in the 2014 REF are allowed to refer to metrics but not to rely on them. The Higher Education Funding Council for England has commissioned an independent review into the use of metrics in research assessment. Professor Bishop has made a submission supporting the use of departmental h-indices.

Noting that the calculation for psychology departments took her only about three hours, she wrote: “If all you want to do is to broadly rank order institutions into categories that determine how much funding they will get, then it seems to me it is a no-brainer to go for a method that could save us all from having to spend time on another REF.”

But Dr Kenna questioned whether a correlation between h-indices and peer-review rankings of even about 80 per cent, as he had calculated for chemistry, could be considered acceptable as that would still mean that somedepartments would suffer the “tragedy” of being inaccurately ranked. He also feared that the adoption of the h-index by the REF would amount to a “torpedo to curiosity-driven research” as researchers would seek to maximise their own indices.

“If we are honest, we hope the correlation doesn’t hold. But we are just trying to do a neutral job that anyone can check,” he said.

Register to continue

Why register?

- Registration is free and only takes a moment

- Once registered, you can read 3 articles a month

- Sign up for our newsletter

Subscribe

Or subscribe for unlimited access to:

- Unlimited access to news, views, insights & reviews

- Digital editions

- Digital access to THE’s university and college rankings analysis

Already registered or a current subscriber? Login