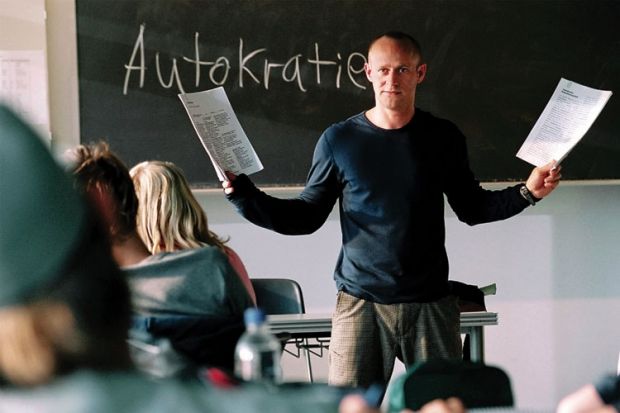

Source: Kobal

Clear marks: there are many features of teaching that students can reliably distinguish and agree about. Teachers’ colleagues can spot these features as well.

“You can measure research, but you can’t measure teaching” is a common refrain. But what does the research evidence tell us about this issue? There has been more research on how to measure the effectiveness and quality of teaching, mainly by using questionnaires, than on any other aspect of higher education, and there is a consensus about the findings.

Can you measure an individual academic’s teaching performance?

Simply asking whether teaching is “good” invites all kinds of interpretations of what the term means, and it can lead to wide variation in student responses. If, in contrast, you ask students about specific teacher behaviours that are known to affect learning, such as whether a teacher gives them prompt feedback on assignments, then students tend to agree closely with each other. Such questions distinguish well between teachers, and can be trusted and interpreted.

Conclusions about student feedback questionnaires can be found in substantial reviews and meta-analyses of thousands of studies, such as those by Herb Marsh, professor in the department of education at the University of Oxford.

There are many features of teaching that students can reliably distinguish on different occasions, and about which they agree with each other. Teachers’ colleagues can spot these features as well, and, moreover, their perceptions tend to agree with those of students. Judgements made about these features are stable over time and discriminate well between teachers. The view that students can recognise the value of teaching only in distant retrospect is contradicted by the available evidence: students do not usually change their minds later.

Students can readily distinguish between the teachers they like and the teachers they think are effective, and so this is not a beauty contest. There are some systematic biases in students’ responses, but they are not disabling provided that measures are compared sensibly. For example, comparing questionnaire scores for one teacher of a large-enrolment compulsory course with scores for another teacher of a small optional course would not be fair. Using questionnaire scores in personnel decisions is risky unless a range of other evidence is employed as well.

Well-developed questionnaires, such as the Student Evaluation of Educational Quality (SEEQ), created by Professor Marsh, produce scores on those aspects of teaching that students can reliably judge. These scores have been found to link reasonably closely to all kinds of outcomes of good teaching, such as student effort and student marks. Consequently, measures can be valid as well as reliable.

Students may judge to be good those teachers who award them high marks. This problem is overcome in studies of large-enrolment courses where many teachers each teach their own small group in parallel, with all students then sitting the same exam, which is marked independently. It is usual to find differences in student marks between groups that can be attributed to measurable differences in student perceptions of their teachers.

It takes many years of rigorous research to develop a measure such as the SEEQ. Unfortunately, the student feedback questionnaires used in higher education in the UK tend to be “home-made”, include variables known not to be linked to student performance, lack any proof of reliability or validity and do not distinguish well between teachers or courses. Many are likely to be both untrustworthy and uninterpretable and deserve much of the criticism they receive.

Can you measure the quality of teaching across a whole degree programme?

In England’s increasingly marketised higher education sector, the primary focus has been on measuring students’ experience of degree programmes, rather than their experience of individual teachers – and this involves a great deal more than aggregating measurements of individual teachers.

Research has identified the characteristics of programmes that are associated with the greatest learning gains. The overall effect of these influential variables is to improve student engagement. As engagement predicts learning gains, degree programmes with the best teaching can be argued to be those that achieve the highest levels of engagement.

A US questionnaire, the National Survey of Student Engagement (NSSE), measures engagement and predicts learning gains well. If you use the NSSE to identify teaching quality problems, and use the research evidence to select and adopt alternative approaches to teaching that are associated with better engagement, then student engagement improves and learning gains improve.

The NSSE works so well as a measure of teaching, and as a strategy to improve teaching, that every year hundreds of US and Canadian institutions willingly pay to use it. The Higher Education Academy has piloted a short version of the NSSE in the UK.

Can you judge academics’ teaching performance when making promotion decisions?

When academics compete for promotion on the basis of research achievement, this does not involve measurement but judgement. And judging research is not an exact science, as evidenced by reviewers of my articles who have had diametrically opposed opinions. But at least when comparing researchers for promotion, expert judgements have already been made about articles and grants, and the overall judgement of the individual is based largely on these prior judgements.

In contrast, the main problem with judging teachers for promotion is that expert peer judgements have not normally been made at an earlier stage. In research universities where judgements of teaching are central to promotion decisions, such as Utrecht University and the University of Sydney, teachers must collect evidence of their performance over time, just as in the development of a research CV. It has taken departments at these institutions a decade or more to work out how to judge such teaching evidence consistently and with confidence, and standards and expectations have risen as they have become better at it. Judging teaching is difficult and time-consuming, but probably no more difficult than judging research.

So it is possible to measure teaching both for individual teachers and for programmes, and it is possible to judge teaching for promotion decisions. Much of the usual criticism is justified, however, for the current teaching evaluation practices at many higher education institutions.
