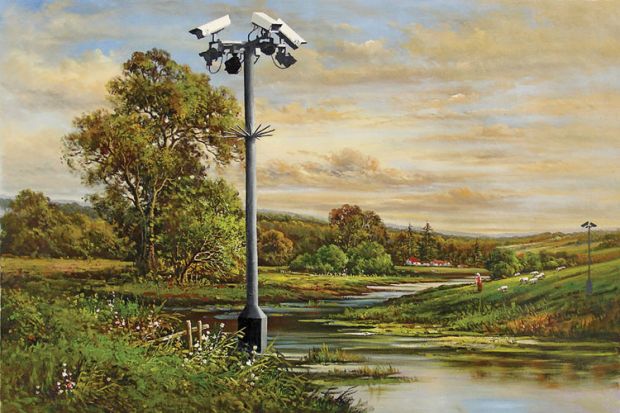

Source: Banksy

For those of us who believe in the virtues of the liberal university and the importance of academic freedom, these monitoring systems are dangerous

With the publication of yet another major review of university efficiency just months away, higher education institutions are under intense pressure to prove that they are spending their money wisely.

Vice-chancellors may have hoped that they had seen the last of the Treasury bean-counters after making the millions of pounds of savings recommended by Sir Ian Diamond, principal and vice-chancellor of the University of Aberdeen, in the 2011 Universities UK report Efficiency and Effectiveness in Higher Education.

But outsourcing IT services, privatising accommodation and sharing research equipment only go so far towards reducing university costs. Last winter, Sir Ian was asked by the chancellor, George Osborne, to get the band of efficiency experts back together and deliver a new report into potential savings.

This time, the thorny subject will be personnel, which makes up 55 per cent of all higher education spending. To show that taxpayers are getting bang for their buck, university chiefs will turn to a bewildering array of data showing what staff do with their time and why they represent a good use of public money.

Among the data will be information drawn from the costing methodology known as Transparent Approach to Costing (Trac), which may also be used after next year’s general election to show how much teaching actually costs. Some vice-chancellors hope it will provide a strong case that the £9,000 tuition fee cap should be increased.

However, many rank-and-file academics view Trac as one of several insidious ways in which academic activity is being monitored, assessed and controlled. They might add that the monitoring of performance, time and output now extends to almost every aspect of academic life.

Academics must submit returns to funders to justify the money and time spent on a project, while institutional and departmental heads keep tabs on the impact factor of the journals in which individual scholars publish, as well as on various measures of their citation performance, such as their h-index. Managers have allegedly used such measures to decide which academics to enter for the research excellence framework. Research metrics have also been used to single out staff for redundancy, as in the controversial 2012 restructuring exercise at Queen Mary University of London’s schools of medicine and dentistry and chemical and biological sciences.

On the teaching front, students fill in module evaluation questionnaires (MEQs) for individual lecturers, and in many cases the latter must achieve minimum MEQ scores to pass probation. Departments are under pressure to do well in the National Student Survey, since NSS scores are one of the factors that affect placement in the national league tables published by newspapers.

Some universities have even mooted the idea of using student grades as part of staff appraisals, with academics to be judged by the number of students achieving a 2:1 or better in their modules, although the University of Surrey shelved such a proposal when concerns were raised that it could lead to degree inflation.

Steven Ward, professor of sociology at Western Connecticut State University and author of Neoliberalism and the Global Restructuring of Knowledge and Education (2012), says he has witnessed the creep of a vast range of “new public management tactics” in the US, too, since the 1980s.

“For those of us who believe in the virtues of the relatively autonomous, liberal university and the importance of academic freedom, these monitoring systems are quite dangerous,” he argues. “They begin to limit the type of research that takes place (applied over basic) and the type of teaching that occurs (individualised versus mass-produced).”

Ward, who penned a popular satire of performance monitoring that was published in Times Higher Education earlier this year (“Academic assessment gone mad”, Opinion, 6 February), complains that this new managerial style “requires lots of time-sucking box-ticking, report writing and number crunching that deflects from the core mission of teaching and research”. These systems also “require a type of cynical gaming” to ensure that one’s department does not end up on the wrong side of a benchmarking exercise, he adds.

In seeking to explain why this new type of performance management has arisen in the academy, replacing largely peer-based, department-centred forms of staff assessment, Ward points to the decline of the US tenure system, in which a six-year probationary period of intense checks is rewarded with tenure, followed by a significantly more laissez-faire approach to monitoring an academic’s activity. Nowadays some 70 per cent of US faculty do not have tenure, up from 57 per cent in 1993, according to the American Association of University Professors, and this allows the use of a more stringent set of performance measures.

While political calls for “accountability” have grown louder over the years, academics have good reasons to oppose several types of monitoring and metrics-driven performance assessment, says Amanda Goodall, senior lecturer in management at Cass Business School, City University London and research fellow at the IZA Institute for the Study of Labor in Bonn.

Studies have shown that creativity is severely inhibited by heavily monitored environments, says Goodall. She highlights a landmark 1967 study by University of Michigan psychologist Frank M. Andrews, “Creative ability, the laboratory environment, and scientific performance”, as well as more than 300 other articles on creativity and the work environment. These studies indicate that “people who work in creative research fields need a high degree of autonomy to develop their work – the most creative places tend to have high levels of autonomy”, Goodall says.

Yet Goodall believes that, although monitoring has generally gone too far, some forms of monitoring and assessment can be positive. While the REF is frequently criticised for promoting a short-termist “publish or perish” culture, Goodall says she is a fan. “I quite like the fact that I know what I have to produce, which is to publish four good articles over a six-year period,” she says. “That is better than having an arbitrary decision [about required research output] made by a male academic in senior management.”

University leaders would also assert that there are many positive sides to other monitoring methods, such as Trac, not least the extra money it has yielded for the sector. Introduced in 2000 in response to the Dearing report, Trac was designed to fix the chronic under-funding of research throughout the 1980s and 1990s by helping to set out its full economic cost. Previously, departments had been locked in lowest-bidder battles with their counterparts at other institutions to win a research contract. At the same time, both funders and universities ignored the hidden costs of research, such as long-term investment in buildings, overhead costs and support staff time. The extra investment associated with Trac – it has been “crucial in attracting enhanced funding into higher education to the tune of £1 billion per annum”, according to Stuart Palmer, chairman of the Trac Development Group – helped to fix the leaking roofs, crumbling buildings and outdated laboratories. Awarding funding according to the “full economic costing” (FEC) of research brought a 31 per cent uplift on average over old-style costing.

Under the Trac system, academic staff’s average work week is divided between teaching, research and other activities. More recently the model has been adjusted to allow teaching to be costed in more detail, in response to fears that it, too, was under-funded.

Palmer says that the costing of activities becomes “more important as financial constraints become tighter”. “Without such evidence, higher education can’t make the case for enhanced funding or demonstrate progress in increased efficiency,” says Palmer, a former deputy vice-chancellor at the University of Warwick. “Reliable costing is central to the debates about student fees, research funding, the sharing of expensive research equipment, investment in the teaching experience and the funding of capital investments.”

But Trac has had many critics, who say that its data – which seek to tally a university’s total spending with its constituent activities – are unreliable and lead to wrong-headed conclusions. That is partly down to the assumption of a 37.5-hour week, with workload then divided between various activities (such as 0.4 research, 0.4 teaching and 0.2 other activities). If an academic works on research over a weekend, the share of their time reported as research rises and the share reported as teaching falls, even though their teaching hours have not changed. To a Trac time sheet, it would look as though the academic had effectively “robbed” teaching to pay for research, a frequent accusation levelled against university staff.
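To make the arithmetic concrete, the short Python sketch that follows uses hypothetical hours: 15 research, 15 teaching and 7.5 other, matching the 0.4/0.4/0.2 split on a 37.5-hour week, plus an assumed 10 hours of weekend research. It illustrates proportional time reporting in general under those assumptions; it is not Trac’s actual calculation method.

```python
# Illustrative only: the hours are hypothetical, chosen to match the
# 0.4 research / 0.4 teaching / 0.2 other split on a 37.5-hour week.
# This shows proportional reporting in general, not Trac's own method.

def shares(hours: dict[str, float]) -> dict[str, float]:
    """Return each activity's share of the total hours reported."""
    total = sum(hours.values())
    return {activity: h / total for activity, h in hours.items()}

# A standard 37.5-hour week split 15 / 15 / 7.5 hours.
normal_week = {"research": 15.0, "teaching": 15.0, "other": 7.5}

# The same week plus an assumed 10 hours of weekend research;
# teaching hours are deliberately left unchanged.
with_weekend = dict(normal_week, research=normal_week["research"] + 10.0)

for label, week in [("normal week", normal_week),
                    ("with weekend research", with_weekend)]:
    rounded = {a: round(s, 3) for a, s in shares(week).items()}
    print(f"{label}: {rounded}")
# normal week: {'research': 0.4, 'teaching': 0.4, 'other': 0.2}
# with weekend research: {'research': 0.526, 'teaching': 0.316, 'other': 0.158}
```

Teaching’s reported share drops from 0.4 to about 0.316 even though the teaching hours are identical, which is the apparent “robbing” of teaching that critics describe.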

“We basically just enter what we think the department wants its staff’s time sheets to look like,” admits one academic, who spoke to THE anonymously and confesses that his actual workload bears little resemblance to that stated in his Trac return. Staff without external research funding are told “not to be too honest” about their research time as it may appear – wrongly – that their teaching duties are being neglected, he adds. Trac data give universities an unrealistic picture of the amount of time it takes to produce high-quality research, he argues.

Even Trac’s defenders admit that its use can have adverse consequences, such as providing a case for course closures or the scaling back of research. Peter Barrett, a former pro vice-chancellor for research and graduate studies at the University of Salford, has championed the use of Trac to help spread teaching and administrative duties more equitably across departments. “You can do it for equity reasons, but if you’re unlucky someone might get hold of the data and start closing a course or two because they are unprofitable,” says Barrett. “If you jump to close something every time it is unprofitable, then it is very dangerous.”

He cites as an example the fact that research seldom recoups its full costs but is of fundamental importance to a university’s work. “Research does not make money, but most universities still want to do it.”

John Robinson, finance director at Brunel University London, calls some of the decisions that have stemmed from Trac analysis “bonkers”. A course in deficit may need only a little extra investment to break even, rather than being cut, he says, because “the answer may be to get more students on to that course to generate more income”.

Trac’s administrative burden is another source of complaint for staff, although this is set to become less onerous in coming years after a sector-wide consultation resulted in an agreement to “streamline” its requirements. The number of institutions that no longer need to comply with the full Trac requirements doubles to about 60 this year after the research income threshold for full compliance was raised from £500,000 to £3 million.

And academic staff spend only two hours a year filling out Trac forms, according to the Trac development group. If that is correct, it may seem like a small price to pay if it justifies the vast sums of public money spent on higher education each year (£13.6 billion in 2011-12, according to UUK). While the burden on the administrative staff who chase up and compile the time sheets is higher, they increasingly do so by using the workload allocation sheets that are collected by institutions for their own internal checks, so there should be little duplication of effort, Trac’s supporters claim.

Elsewhere, many academics might point to tracking of their research output as one of the most immediate forms of staff monitoring. Researchers with high citation metrics or who have published in high-impact journals can be rewarded with more time for research, while those who score less highly may find themselves pushed towards more teaching duties. The owners of citation indexes such as Thomson Reuters’ Web of Science and Elsevier’s Scopus sell software that enables institutions more easily to compare their staff’s metrical merits against others in their field. Elsevier even sells an application, SciVal Strata, that helps research managers compare the performance of their own researchers with that of imaginary “dream teams” drawn from other institutions.

Academics’ behaviour has changed to fit this metrics-driven world, believes Barrett, even when this may not serve the general interests of academia – especially outside the sciences. “The pressure to publish in highly cited journals means papers do not always appear in the most suitable publications,” he says.

This pressure may also push someone to publish a series of articles even though a longer-term project, such as a book, might have greater impact on a discipline, Barrett argues, adding: “Metrics works quite well for science, but I don’t think it works as well for other disciplines.”

But Lisa Colledge, Elsevier’s project director for the benchmarking initiative known as Snowball Metrics, is far more positive about what metrics can help institutions and academics to achieve. Snowball was initiated by John Green, retired chief coordinating officer of Imperial College London, as a way for university managers to be sure that when they benchmark their institution’s performance against that of other similar institutions, they are comparing like with like. The project is being driven by a core group of eight UK universities (plus another seven in the US and eight in Australia and New Zealand), with pro bono management help from Elsevier. The “recipes” they come up with for how data should be processed are available openly: the first “recipe book” was published in 2012 and a new edition was published earlier this year.

Snowball Metrics recipes cover areas such as citation scores, productivity and links with industry. The idea is that this will make it easier for managers to identify strengths and weaknesses at departmental or institutional level, allowing better decisions to be made about what to invest in. Conversely, academics in areas identified as performing relatively poorly might be asked to change their focus, or receive less institutional funding, or none at all, in the future.

While this might sound worrying to some researchers, the trick is to ensure that universities benchmark a broad range of indicators because they require a variety of different strengths to succeed as an institution, Colledge says. “I was visiting Taiwan recently, where researchers are very good at publishing in important journals, but they are worried because they are falling behind in other areas of performance,” she says, pointing to the importance of having good teaching, strong international links and collaboration with industry. “It is unlikely that any one academic is good at all of these things, but a university needs all of these qualities,” she says.

Some of the metrics set to be analysed by Snowball Metrics participants, including the universities of Cambridge and Oxford, might surprise scholars. For example, information is now being gathered, using Google Analytics, on how many times institutions are mentioned not just in the news but also on Facebook and Twitter. This is taken to be a measure of the social impact of research and other activities. Colledge says she was surprised that “such traditional, well-established institutions are so keen to look at alternative metrics”, but views it as understandable that universities “want to know how far research is going beyond the academic sphere”.

Those alarmed to learn that they are being judged by a new set of criteria may take some comfort from the fact that Snowball benchmarking will apply only at an institutional or departmental level. Yet once researchers cotton on to the fact that performing well on these metrics could help their academic careers, they could seize upon another way “to promote their own performance and fight for laboratory space or equipment”, Colledge says.

Academics are also obliged to submit a range of data to funders relating to the outcomes of their projects. From this month, all UK research councils – plus many charitable funders – must use the Researchfish system, which was developed in 2008-09 in collaboration with the Medical Research Council. According to Frances Buck, its co-founder, spokesperson and sales director, the system is unlikely to add to researchers’ reporting burden, given that research-intensive universities have already invested millions in setting up their own internal outcome-tracking systems; Researchfish merely unifies institutions’ approaches.

While Buck is aware that “outcomes fatigue” is prevalent in the academy, she argues that Researchfish will have huge benefits for funders who are keen to see how their money has been used. “By presenting data in a consistent format it is easier to identify patterns than [comparing] large narratives in Word documents,” she says. With Researchfish, a quick scan would easily show, for instance, how many school engagement activities had been delivered by a certain set of projects. It might identify early on whether a project is doing well and merits further funding, and it could also help to avoid duplication of research or suggest areas of collaboration, Buck adds.

It is often argued that academics are now more checked and monitored than ever before, whether by universities, research councils, charities, industry or students, and called to account for their time and expenditure at every turn. But is it really necessary? Koen Lamberts, vice-chancellor of the University of York, believes so. “When student numbers and research funding were very stable and secure, universities didn’t need to use performance indicators,” he says. “They had relatively little control over how they created a portfolio of income, but now funding is more driven by these measures – particularly research income, which is extremely competitive.”

Ward is unconvinced. “Universities have always [been held] accountable, despite what neoliberal politicians argue,” he says. “It is just a question of in what ways, with what mechanism and to whom – I would prefer a ‘lighter’ system based in the profession, rather than the heavy-handed and self-defeating ones that are imposed from the outside.”