The numbers are in: more than 17,500 academics from 137 countries completed the Academic Reputation Survey that will be used to inform the forthcoming 2011-12 Times Higher Education World University Rankings, we can reveal today.

The final figures, confirmed by our rankings’ data supplier Thomson Reuters, represent a 31 per cent increase on last year’s survey responses. They confirm that just under 31,000 experienced academics from 149 countries have engaged directly with the survey in the two years it has been running.

This is great news and a testament to the widespread engagement with our university rankings among experienced scholars all over the globe. All respondents have helped us to build a serious and authoritative piece of research to inform what have become the world's most widely cited university rankings.

In total, there were 17,554 respondents compared with 13,388 in 2010, making this exercise by far the largest of its kind ever undertaken.
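The headline figures can be checked with simple arithmetic. The following minimal Python sketch is an illustrative check only and is not part of the survey methodology. It uses nothing but the two respondent counts quoted in this article. It relies on the fact that nobody who responded in 2010 was invited in 2011, so the two samples do not overlap and can be added.

```python
# Respondent counts as reported in the article
responses_2010 = 13_388
responses_2011 = 17_554

# Year-on-year growth, expressed as a percentage of the 2010 figure
growth_pct = (responses_2011 - responses_2010) / responses_2010 * 100

# Total respondents across both survey years; the samples are disjoint
# because 2010 respondents were not re-invited in 2011
combined = responses_2010 + responses_2011

print(f"Growth: {growth_pct:.1f}%")  # Growth: 31.1%
print(f"Combined: {combined:,}")     # Combined: 30,942
```

The results are roughly 31.1 per cent growth and 30,942 respondents in total. These agree with the "31 per cent increase" and "just under 31,000" figures given at the start of the article.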

A crucial innovation of the THE World University Rankings, reformed and revamped in 2010, is that no one who responded last year was invited to take part this year. This means that only the most up-to-date reputational data will be used in any given year, allowing meaningful comparisons of the information over time.

The survey is invitation-only, and academics are targeted so that respondents are statistically representative of their geographical region and discipline. They are questioned about their own specialist fields and are asked to respond based on their direct experience.

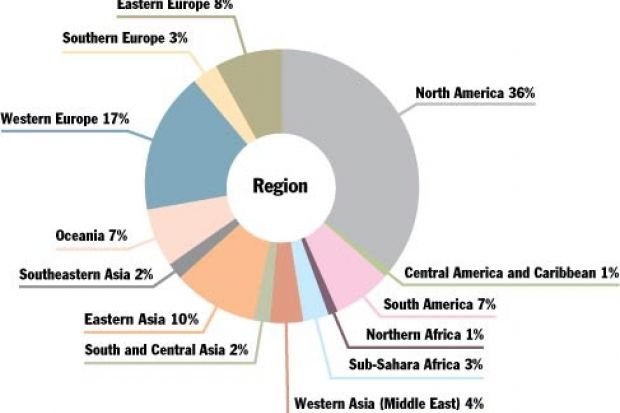

Some 44 per cent of respondents in 2011 were from the Americas, 28 per cent from Europe and 25 per cent from Asia Pacific and the Middle East. Ninety per cent of respondents described their role as an academic, researcher or institutional leader and respondents had spent on average 16 years in the academy.

There was an excellent, balanced spread across the disciplines. The physical sciences and engineering and technology each accounted for about 20 per cent of responses, with 19 per cent for the social sciences, 17 per cent for clinical subjects, 16 per cent for the life sciences and 7 per cent for the arts and humanities.

The 2011 data will be used alongside several other indicators to help build the 2011-12 World University Rankings, to be published in September. They will also be published in isolation from other performance indicators in early 2012, in the second edition of THE's annual World Reputation Rankings.

The responses will also form a component of the Thomson Reuters Institutional Profiles product, which allows detailed comparative profiling of hundreds of universities around the world.

Commentary

It is not surprising that any analysis of “reputation” comes in for a lot of criticism from users as well as institutions. On what basis is any individual assessment made?

First, any summative analysis of a university is inevitably compromised: after all, how can you sum up all its diversity and complexity in a few numbers? Second, an individual judgement may be based on limited information, gathered at a distance, about a single subject area in a university with multiple faculties. Such a judgement is not very meaningful.

These weaknesses have hamstrung the university reputation surveys published in the past. The subject spread and the status of the respondents were unknown, the geographical spread was unclear and certainly not taken into account, and the basis on which judgements were made was untested.

Thomson Reuters has worked with Ipsos to develop a structure for a survey that starts to address these problems. And with last year’s and this year’s results, we now have two large, structured and independent samples to enable us to report to you in a more informed way than has hitherto been possible.

The people contacted for our reputation survey have all authored at least one research paper, and 90 per cent are academic staff. Most appear in Thomson Reuters Web of Knowledge, and we have supplemented that source to increase the survey's subject coverage. In most cases we know which discipline the academics published in and whether they published once or many times. We know where they are working, by institution as well as by region. And the people we contacted this year were a different sample from last year's.

We also asked people to list the institutions they rated most highly by thinking about real-world actions, such as recommendations to students, job choices or collaboration.

Last year we had more than 13,000 respondents; this year we have had more than 17,500. But we are not making the schoolboy error of throwing all 31,000 in a pot together. We are going to use this year’s sample for this year’s analysis.

Later we will report to you on how the two samples compare, and we will start to dig into some of the underlying structure. And in a few years’ time, we will be able to tell you whose reputations have risen and whose bubbles have burst.

Jonathan Adams is director, research evaluation, Thomson Reuters.
