Details of the proposed new methodology for the Times Higher Education World University Rankings have been unveiled.

THE confirmed this week that it plans to use 13 separate performance indicators to compile the league tables for 2010 and beyond - compared with just six measures under the methodology used between 2004 and 2009.

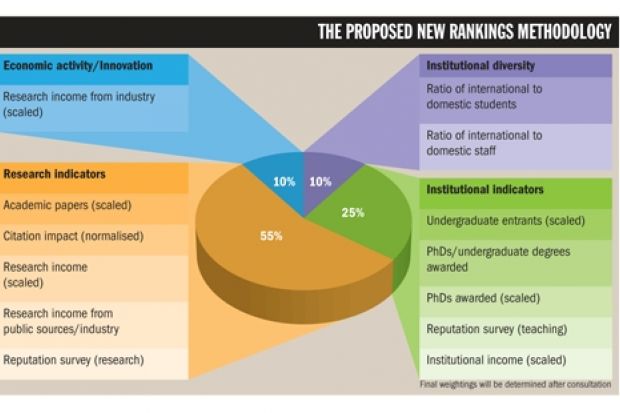

The individual indicators will be grouped into four broad overall indicators, which will be combined to produce the final ranking score.

The core aspects of a university's activities that will be assessed are research, economic activity and innovation, international diversity, and a broad "institutional indicator" including data on teaching reputation, institutional income and student and staff numbers.

"The general approach is to decrease reliance on individual indicators, and to have a basket of indicators grouped across broad categories related to the function and mission of higher education institutions," said Thomson Reuters, the rankings data provider. "The advantage of multiple indicators is that overall accuracy is improved."

THE announced last November that it had ended its arrangement with the company QS, which supplied ranking data between 2004 and 2009. It said it would develop a new methodology, in consultation with Thomson Reuters, and with advisers and readers, to make the rankings "more rigorous, balanced, sophisticated and transparent".

The first detailed draft of the methodology was this week sent out for consultation with THE's editorial board of international higher education experts.

Its members include: Steve Smith, president of Universities UK; Ian Diamond, the former chief executive of the Economic and Social Research Council and now vice-chancellor of the University of Aberdeen; Simon Marginson, professor of higher education at the University of Melbourne; and Philip Altbach, director of the Center for International Higher Education at Boston College. A wider "platform group" of about 40 university heads is also being consulted.

The feedback will inform the final methodology, to be announced before the publication of the 2010 world rankings in the autumn.

Indicators in detail

While the old THE-QS methodology used six indicators - with a 40 per cent weighting for the subjective results of a reputation survey and a 20 per cent weighting for a staff-student ratio measure - the new methodology will employ up to 13 indicators, which may later rise to 16.

For "research", likely to be the most heavily weighted of the four broad indicators, five indicators are suggested, drawing on Thomson Reuters' research paper databases.

This category would include citation impact, looking at the number of citations for each paper produced at an institution to indicate the influence of its research output.

It would also include a lower-weighted measure of the volume of research from each institution, counting the number of papers produced per member of research staff.

The category would also look at an institution's research income, scaled against research staff numbers, and the results of a global survey asking academics to rate universities in their field, based on their reputation for research excellence.

"Institutional indicators" would include the results of the reputation survey on teaching excellence and would look at an institution's overall income scaled against staff numbers, as well as data on undergraduate numbers and the proportion of PhDs awarded against undergraduate degrees awarded.

For 2010, the "economic/innovation" indicator would use data on research income from industry, scaled against research staff numbers. In future years, it is likely it would include data on the volume of papers co-authored with industrial partners and a subjective examination of employers' perceptions of graduates.

International diversity would be examined by looking at the ratio of international to domestic students, and the ratio of international to domestic staff. It may also include a measure of research papers co-authored with international partners.

Ann Mroz, editor of Times Higher Education, said: "Because global rankings have become so extraordinarily influential, I felt I had a responsibility to respond to criticisms of our rankings and to improve them so they can serve as the serious evaluation tool that universities and governments want them to be.

"This draft methodology shows that we are delivering on our promise to produce a more rigorous, sophisticated set of rankings. We have opened the methodology up to wide consultation with world experts, and we will respond to their advice in developing a new system that we believe will make sense to the sector, and will be much more valuable to them as a result."

THE PROPOSED NEW RANKINGS METHODOLOGY

10% Economic activity/Innovation

Research income from industry (scaled against staff numbers)

10% International diversity

Ratio of international to domestic students

Ratio of international to domestic staff

25% Institutional indicators

Undergraduate entrants (scaled against academic staff numbers)

PhDs/undergraduate degrees awarded

PhDs awarded (scaled)

Reputation survey (teaching)

Institutional income (scaled)

55% Research indicators

Academic papers (scaled)

Citation impact (normalised by subject)

Research income (scaled)

Research income from public sources/industry

Reputation survey (research)
