Source: James Fryer

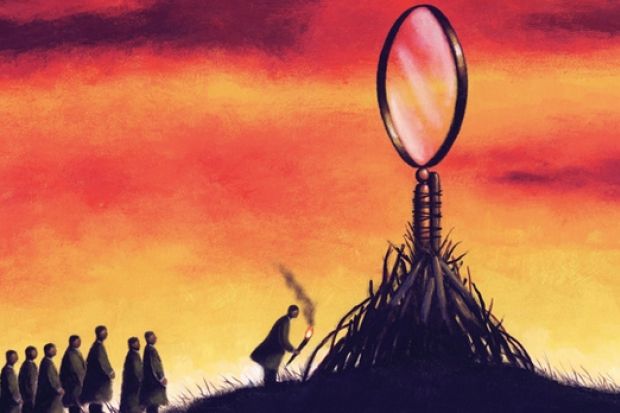

“Don’t let them ban telescopes,” I hear Copernicus muttering as he writhes beneath the grass.

Perhaps we should have expected it, but the research excellence framework is going to have dangerously subjective, anti-evidential features. In many academic disciplines, data will be banned.

Here, in the jargon of the REF documentation, are two examples of the problem: “(Subpanels) 16, 19, 20, 21, 22, 23, 24, 25 and 26 will neither receive nor make use of citation data, (nor) any other form of bibliometric analysis.”

And: “No (subpanel) will use journal Impact Factors (nor) any hierarchy of journals in their assessment of outputs.”

In regular English, what does this mean? It means, sadly, that evidence is out and subjectivity is in. If you are a UK scholar, a tiny number of people - at a guess, only two readers, because the size of the REF exercise precludes more - will judge your post-2008 work. They will do so in any way they feel is appropriate, without recourse to a clear standard of objectivity or transparency and without comparison against any unambiguous data.

In large numbers of cases (for example, in almost all of the UK social sciences apart from economics and a few parts of geography), these assessors will be unencumbered by facts. Moreover, unlike the REF panel for chemistry or for mathematics, those assessment panels have chosen to be so unencumbered. It is unsettlingly reminiscent of anti-Copernicans who did their best to ban devices that could check empirically on their faith-based theory that the Earth lies at the centre of the Universe.

Worse, there is rejoicing about this decision to turn one’s face against evidence. It is easy to find websites, particularly ones written by humanities professors, pleased by the REF’s design. “The dreaded ‘metrics’ have been banished,” as one site puts it. But would you want to be operated on in a medical procedure for which there is no empirical support?

I wonder if David Willetts is aware that large numbers of REF 2014 panels have created a setting in which aged biases and subjective whims can be brought to bear. Because of the latest rubric, nobody on an REF assessment panel in spring 2014 will be able to say: “Hey, wait a minute. It is not reasonable to give a low grade to this journal article just because you don’t happen to like it; the damn thing appeared in the American Sociological Review, an elite publication, has been cited 100 times since and not one of those citations is critical of the work. You may not approve of the article but a heck of a lot of people clearly do.”

Such statements will be struck from the record.

In the majority of REF evaluation panels, one or two anonymous assessors’ views will now be decisive, whether or not they have published in that exact area of research, and even though their personal views cannot be traced back publicly to them. That is a system that carries with it the possibility of systematic intellectual corruption.

In promotion cases, I have heard a senior professor at a distinguished university say something along the lines of: “I will never countenance any use of citations data and all journal rankings should be ignored.” What the speaker did not seem to notice is that this presumed that he alone knew better than journal referees and journal readers.

The REF is thus in some danger. The principle of evidence-based policy in higher education is not going to apply to large parts of it. That is a shame and a worry.

A fine article, “The assessment of research quality in UK universities: Peer review or metrics?”, appeared earlier this year in the British Journal of Management. Written by Jim Taylor, emeritus professor of economics at Lancaster University, it explains that the value of citations in research assessment exercises is that they provide some bulwark against biases and sheer subjectivity.

This does not mean that citations data or journal rankings should be used unthinkingly or with no element of human judgement. In my opinion, it would not be sensible to adopt a system where, for example, a computer did the REF by adding up points on a mechanical checklist.

Telescopes provide only one kind of evidence and if used incorrectly can lead to incorrect conclusions. But that is not a reason to ban them.
